(Image source: Apple Pixar’s)

Apple has always been at the forefront of blending technology with artistry, and its latest research robot is no exception. Drawing inspiration from Pixar’s iconic animation style, this robot isn’t just a functional tool; it’s designed to move with lifelike fluidity and charm.

The influence of Pixar’s storytelling and character design brings a unique personality to the robot, making it more approachable and intuitive to interact with. But why would Apple, a tech giant, look to an animation studio for design cues?

Why Apple Turned to Pixar for Inspiration

(Image source: Apple Pixar’s)

In early February 2025, Apple unveiled a research prototype of a Pixar-inspired robot lamp, capturing the tech world’s attention. The design, reminiscent of Pixar’s iconic Luxo Jr., features expressive, lifelike movements aimed at exploring how robots can interact more naturally with humans. Apple researchers shared a detailed paper and an accompanying video showcasing the robot’s ability to tilt, swivel, and emote through subtle animations, bringing an almost playful personality to the machine.

By incorporating animation techniques like squash, stretch, and anticipation, the robot can tilt its head in curiosity or lean back in surprise, making interactions feel intuitive. It relies on fluid body movements rather than a face to express joy, confusion, or attentiveness, mimicking the emotional nuance that makes Pixar characters so relatable.
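Anticipation is the classic animation principle of winding up slightly in the opposite direction before a main action. The sketch below shows one way to apply it to a single joint angle. It is not taken from Apple’s paper: the function names, the cosine easing, and the default `anticipation` and `wind_up` fractions are all illustrative assumptions.

```python
import math


def ease_in_out(u: float) -> float:
    """Cosine ease-in/ease-out: 0 at u=0, 1 at u=1, zero velocity at both ends."""
    return 0.5 - 0.5 * math.cos(math.pi * u)


def anticipated_trajectory(start: float, target: float, steps: int,
                           anticipation: float = 0.15,
                           wind_up: float = 0.25) -> list[float]:
    """Hypothetical joint-angle samples that wind up briefly, then move to the target.

    anticipation: size of the wind-up as a fraction of the full move (assumed default).
    wind_up:      fraction of the timeline spent on the wind-up phase (assumed default).
    """
    back = start - anticipation * (target - start)  # wind-up pose, opposite to the main move
    path = []
    for i in range(steps + 1):
        u = i / steps
        if u <= wind_up:
            # Phase 1: ease from the start pose into the wind-up pose.
            angle = start + (back - start) * ease_in_out(u / wind_up)
        else:
            # Phase 2: ease from the wind-up pose to the target.
            angle = back + (target - back) * ease_in_out((u - wind_up) / (1 - wind_up))
        path.append(angle)
    return path


# Example: tilt the lamp head from 0 to 30 degrees over 20 control ticks.
trajectory = anticipated_trajectory(0.0, 30.0, steps=20)
```

Here the head first dips to -4.5 degrees before easing up to 30 degrees. That small wind-up is what makes a motion read as intentional rather than mechanical.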

At first glance, it might seem surprising that Apple, a company renowned for sleek, minimalist hardware, would look to an animation studio for inspiration when designing a research robot. But Pixar’s mastery of storytelling and lifelike character animation made it the perfect creative influence.

The connection isn’t as unlikely as it seems, either. Steve Jobs, Apple’s co-founder, played a pivotal role in Pixar’s early success, so a creative bridge between the two companies has existed for decades. By channeling Pixar’s approach to building emotionally resonant characters, Apple’s engineers are crafting a robot that feels less like a cold machine and more like a helpful, animated companion.

ELEGNT, Apple’s Movement Design Approach

(Image source: Apple Pixar’s)

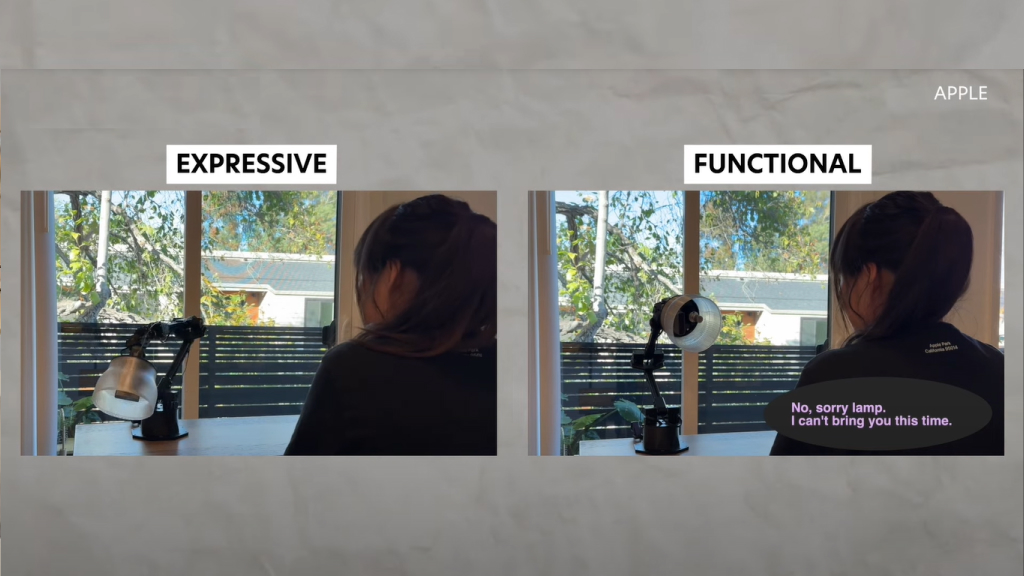

At the core of Apple’s Pixar-inspired robot is a movement design framework called ELEGNT, short for Expressive and Functional Movement Design for Non-Anthropomorphic Robots. Apple’s research team designed six task scenarios to test the robot and detailed the work in a research paper published in early 2025, which is available on Apple’s Machine Learning Research website.

The research explored how expressive movement could enhance human-robot interaction, especially for non-anthropomorphic designs. The goal was to test whether body language alone could make a robot feel more relatable and emotionally intelligent.

The six task scenarios Apple used were:

1. Playing Music and Dancing

This scenario tested how well the robot could use movement to enhance entertainment, turning a simple audio experience into an interactive, almost companion-like event. The robot responded to the music with dynamic, rhythmic movements: swaying, bouncing, and dancing to the beat.
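To make the idea of moving "to the beat" concrete, here is a minimal sketch that derives a sway and a bounce from a song’s tempo. It assumes the tempo in beats per minute is already known; the function names, amplitudes, and the choice of one sway cycle per two beats are hypothetical and are not details from Apple’s research.

```python
import math


def sway_angle(t: float, bpm: float, amplitude_deg: float = 10.0) -> float:
    """Side-to-side sway in degrees; one full left-right cycle spans two beats (assumption)."""
    beat_period = 60.0 / bpm  # seconds per beat
    return amplitude_deg * math.sin(math.pi * t / beat_period)


def bounce_height(t: float, bpm: float, height_mm: float = 5.0) -> float:
    """Vertical bounce in millimetres: touches down on every beat, peaks halfway between beats."""
    beat_period = 60.0 / bpm
    return height_mm * abs(math.sin(math.pi * t / beat_period))


# Sample both motions at 50 Hz for one second of a 120 BPM track.
frames = [(sway_angle(i / 50, 120), bounce_height(i / 50, 120)) for i in range(50)]
```

Tying both motions to the beat period keeps them in phase with the music whatever the tempo; a real system would also need beat tracking to find where the beats actually fall.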

2. Projecting Images and Adjusting Angle

Equipped with a built-in projector, the robot demonstrated its ability to cast images onto walls or surfaces. It dynamically adjusted its position, tilting or rotating its head to optimize projection angles.

3. Answering Questions with Expressive Feedback

When answering questions, the robot combined voice-based responses with physical gestures. These physical reactions added emotional depth to the interaction, reinforcing responses with natural, human-like body language.

4. Illuminating Surfaces with Purpose

Acting as a responsive light source, the robot showed how it could blend functionality with gentle, intentional movement, reinforcing its role as an adaptive tool.

5. Following Users and Tracking Motion

The robot was tested on its ability to follow users as they moved around a room. It rotated smoothly to keep its head oriented toward the user, subtly leaning in or out depending on proximity. This scenario evaluated how well the robot could use body language to signal attentiveness, making the user feel seen and acknowledged even without facial features.
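Following a user boils down to two small calculations: the rotation needed to point at the person, and a lean that depends on distance. The sketch below assumes the user’s position is already known in the robot’s own coordinate frame (x forward, y left, in metres). The thresholds, the lean convention, and the per-tick rate limit used for smooth rotation are illustrative assumptions, not Apple’s implementation.

```python
import math


def face_user(user_x: float, user_y: float,
              near_m: float = 0.5, far_m: float = 2.0,
              max_lean_deg: float = 15.0) -> tuple[float, float]:
    """Return (yaw_deg, lean_deg) for a hypothetical lamp at the origin facing +x.

    yaw_deg:  rotation that points the lamp head at the user.
    lean_deg: assumed convention, negative = lean back (user close),
              positive = lean in (user far).
    """
    yaw_deg = math.degrees(math.atan2(user_y, user_x))
    distance = math.hypot(user_x, user_y)
    # Map distance linearly onto [0, 1] between the near and far thresholds, then clamp.
    s = (distance - near_m) / (far_m - near_m)
    s = min(max(s, 0.0), 1.0)
    lean_deg = (2.0 * s - 1.0) * max_lean_deg
    return yaw_deg, lean_deg


def step_toward(current_deg: float, target_deg: float, max_step_deg: float) -> float:
    """Rotate at most max_step_deg per control tick, taking the shorter way around the circle."""
    error = (target_deg - current_deg + 180.0) % 360.0 - 180.0  # signed error in [-180, 180)
    step = max(-max_step_deg, min(max_step_deg, error))
    return (current_deg + step + 180.0) % 360.0 - 180.0  # keep the result in [-180, 180)
```

Calling `step_toward` once per control tick, with the yaw from `face_user` as the target, produces rate-limited turning instead of an abrupt snap toward the user.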

6. Expressing Emotion Through Gestures

In the final test, researchers explored how the robot could express emotions through movement alone. It performed gestures to simulate emotions like curiosity (leaning forward), surprise (a quick recoil), and happiness (gentle bouncing). This scenario helped refine ELEGNT’s motion library, showing that even simple, non-humanoid movements can convey a rich spectrum of emotions.
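A motion library like this can be pictured as a set of named keyframe curves. The sketch below stores hypothetical keyframes for the three gestures mentioned (curiosity, surprise, happiness) as head pitch offsets and samples them by linear interpolation. The names, timings, and angles are invented for illustration and do not reflect ELEGNT’s actual library.

```python
from bisect import bisect_right

# Hypothetical keyframes: (time in seconds, head pitch offset in degrees; + = forward).
GESTURES: dict[str, list[tuple[float, float]]] = {
    "curiosity": [(0.0, 0.0), (0.8, 12.0), (2.0, 12.0)],       # slow lean forward, then hold
    "surprise": [(0.0, 0.0), (0.15, -20.0), (1.0, -10.0)],     # fast recoil, partial recovery
    "happiness": [(0.0, 0.0), (0.25, 5.0), (0.5, 0.0), (0.75, 5.0), (1.0, 0.0)],  # gentle bounces
}


def sample_gesture(name: str, t: float) -> float:
    """Linearly interpolate a gesture's pitch offset at time t, clamped to its first/last frame."""
    frames = GESTURES[name]
    times = [ft for ft, _ in frames]
    if t <= times[0]:
        return frames[0][1]
    if t >= times[-1]:
        return frames[-1][1]
    i = bisect_right(times, t)  # guarantees times[i - 1] <= t < times[i]
    (t0, v0), (t1, v1) = frames[i - 1], frames[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


# Sample the surprise gesture at 10 Hz over its one-second duration.
surprise_curve = [sample_gesture("surprise", i / 10) for i in range(11)]
```

Keeping gestures as data rather than code makes it easy to tune timings, which matters because the speed of a motion (a quick recoil versus a slow lean) carries much of its emotional meaning.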

Features and Capabilities

(Image source: Apple Pixar’s)

Beneath the Pixar-inspired charm lies an impressive suite of advanced features and cutting-edge capabilities. Designed to assist in product testing, environmental research, and more, this robot embodies Apple’s signature blend of innovation and intuitive design. Some of its key features and technical capabilities are:

1. Expressive, Animated Movements

Thanks to Pixar’s influence, the robot’s movements are governed by advanced motion control algorithms that mimic natural, lifelike behaviors. It uses a combination of gyroscopic sensors, dynamic actuators, and real-time motion tracking to create smooth, character-like gestures. This makes human-robot interaction feel surprisingly intuitive.

2. Neural Engine-Powered AI and Machine Learning

The robot is powered by Apple’s custom silicon, likely an M-series chip, featuring a dedicated Neural Engine for on-device machine learning. This allows the robot to process data locally, reducing latency and enhancing its ability to learn from interactions in real time. It can recognize complex visual patterns, analyze speech, and adapt its responses without needing to constantly ping external servers.

3. High-Precision Haptic Feedback and Tactile Sensors

Its multi-jointed arms are equipped with an array of pressure and touch sensors, enabling delicate object manipulation. The robot can detect minute differences in texture, weight, and material composition, making it ideal for detailed tasks like testing product durability or assembling intricate components.

4. LiDAR, Depth Sensing, and Spatial Awareness

Built with Apple’s industry-leading LiDAR technology, the same technology found in iPhone Pro and iPad Pro models, the robot can map its environment with millimeter precision. It combines this with stereo cameras and ultrasonic sensors to navigate dynamic spaces, avoid obstacles, and interact with objects in three dimensions.

5. Seamless Ecosystem Integration with Ultra-Wideband (UWB)

Beyond simple wireless connectivity, the robot supports Ultra-Wideband (UWB) for pinpoint spatial awareness within Apple’s ecosystem. It can communicate with nearby devices, sync data in real time, and even respond to gestures or voice commands through an Apple Watch or iPhone.

6. Natural Language Understanding (NLU) and Voice Interaction

Powered by a refined version of Apple’s on-device Siri engine, the robot can process and understand natural language commands with incredible accuracy. It uses advanced speech recognition and sentiment analysis to interpret tone and context, allowing it to respond in an emotionally aware manner.

7. Modular and Upgradeable Architecture

The robot is designed with longevity in mind. Its hardware components, from sensors to actuators, are modular and easily replaceable. Researchers can swap out parts, add new sensors, or upgrade processing power as technology evolves, ensuring the robot remains cutting-edge for years to come.

8. Energy Efficiency and Sustainable Design

True to Apple’s sustainability efforts, the robot is built with recycled aluminum and responsibly sourced rare earth elements. It’s powered by a high-capacity, long-life lithium-iron-phosphate (LiFePO4) battery and includes intelligent energy management systems to optimize power consumption based on activity.

What This Means for Consumers and Developers

(Image source: Apple Pixar’s)

Apple’s Pixar-inspired robot hints at a future where robots seamlessly integrate into our lives, not as cold, mechanical tools but as emotionally intelligent companions. This breakthrough has powerful implications for both consumers and developers, potentially reshaping the way we interact with technology.

For consumers, Apple’s robot could redefine smart living. Imagine a home assistant that doesn’t just follow commands but understands your emotions through subtle cues. If you come home after a long day, the robot might dim the lights, play calming music, and lower its posture to show empathy. Or during a workout, it could cheer you on with upbeat movements and celebratory gestures when you hit milestones.

For developers, this robot represents an entirely new platform to build on, one that combines physical hardware, machine learning, and expressive animation. With Apple’s ecosystem, developers could create apps that leverage the robot’s advanced spatial awareness and motion capabilities.

Developers could build mindfulness apps where the robot gently sways to guide breathing exercises or changes its lighting to signal different meditation stages. The robot hints at a future where machines don’t just serve us but understand us, responding in ways that make technology feel like a natural, even joyful, part of everyday life.